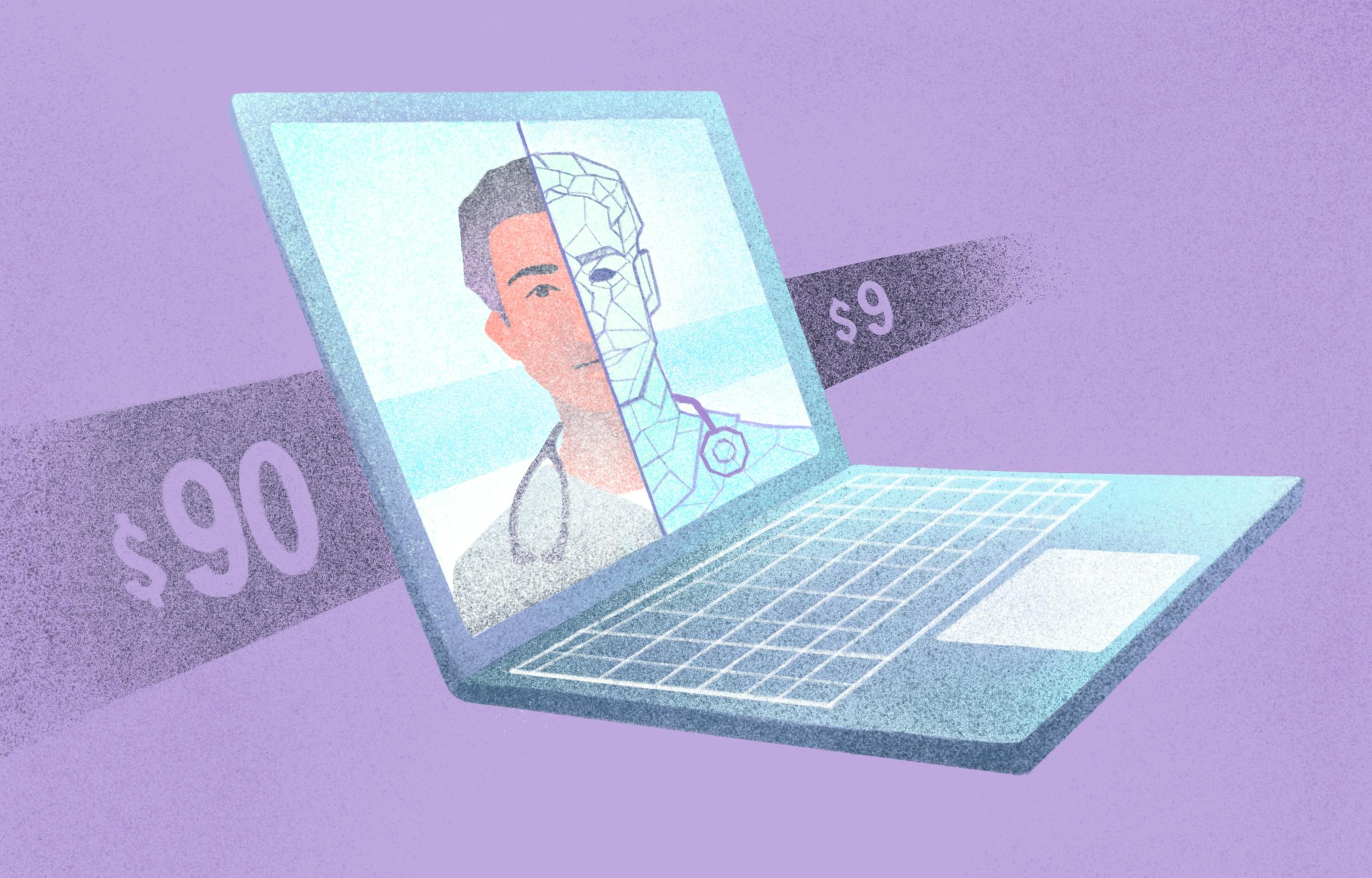

$9 AI “nurses”? Inside the NVIDIA-Hippocratic AI collaboration

Of the many healthcare AI initiatives NVIDIA announced at its GTC AI conference this month, one stands out as particularly controversial: a collaboration with medical AI startup Hippocratic AI to create AI nurses.

The generative AI nurses, which consult with patients in real time over video calls, will be available for $9 per hour, roughly a tenth of what many human nurses cost.

On one hand, this project could ultimately save the healthcare industry, and patients, a lot of time and money. On the other hand, we may be jumping the gun in thinking generative AI can effectively replace human nurses.

Let’s dig into what the project is really about and what proponents and critics are saying about it.

GenAI in clinical care

When a patient gets on a call with a Hippocratic AI nurse, they see a human-like figure on their screen. The AI nurse can then verbally guide the patient through matters like follow-up care or their prescribed drug regimen, all the while promising to report back to the patient’s (human) healthcare provider.

The AI agents can’t make diagnoses, but they can engage patients, acting as a reassuring link between patients and providers.

Hippocratic also doesn’t aim to replace all nurses (this is part of why the startup calls its GenAI figures “agents”), but rather to help fill the gaps left by staff shortages. These are the same staff shortages behind the many nursing strikes we saw last year.

“Voice-based digital agents powered by generative AI can usher in an age of abundance in healthcare, but only if the technology responds to patients as a human would,” said Kimberly Powell, vice president of Healthcare at NVIDIA.

These AI nurses—or agents—aren’t the only AI-based care tool in the headlines recently. Forward’s self-service primary care CarePods come to mind—as do the triage chatbots rolling out in clinics like Amazon-owned One Medical.

Yet Hippocratic’s product captures the imagination because the company itself boldly invites a direct comparison with human nurses.

Is healthcare ready to automate human-to-human care?

According to Hippocratic AI, yes. The startup claims its GenAI nurses outperform human nurses on bedside manner and patient education, and underperform them only slightly on satisfaction surveys.

The company also emphasizes safety, with the words “safety-first” preceding many references to its GenAI product on its website. That ethos is built into the name, too: “Hippocratic” refers to the Hippocratic Oath, the ethical pledge associated with the promise to “first, do no harm.”

And again, the agents aren’t taking over full care duties in Hippocratic’s plans. They step up as virtual assistants, automating points of contact that perhaps don’t need a human touch.

But the question of where a human touch is necessary is where things get controversial. After all, nurses are seen by many as “the most trusted profession.” Could automating away even some of their points of contact erode patients’ trust?

Reactions to the news from the public and healthcare professionals have been mixed. Some worry about that erosion of trust, while others have praised the opportunity to lighten human nurses’ workloads and cut costs.

The question remains: How will patients react as NVIDIA and Hippocratic roll out their product to healthcare systems?

Human checks and balances can make clinical AI work for us

As the AI revolution has marched on, panicked predictions that AI would come for everyone’s jobs have not (yet) panned out.

Some administrative work and cultural production are increasingly being automated, but the so-called “care economy” has continued to prove resilient to automation. GenAI may pass licensing exams, but it can’t provide the care that human providers do.

That said, automation does make many kinds of work and production cheaper. And there is perhaps no industry where lowering costs is more urgent than healthcare.

So while automation encroaching on clinicians’ jobs is understandably alarming, we must develop ways to make this technology work for us—to empower clinicians and patients, not frighten and threaten them.

Regulation will likely play a big role in building trust in clinical AI, as will frameworks for maintaining human oversight of AI systems. This is why many healthcare AI companies have promised to keep humans “in the loop” as a check on clinical AI, catching potentially harmful errors.

Yet, how we keep those humans in the loop is an open question.

“Human in the loop could mean different things for different startups,” said Boston University data scientist Elaine Nsoesie, a faculty affiliate at the Distributed AI Research Institute. “It could mean that humans provide the training data for algorithms or that humans assess outputs, make the final decision, or help an algorithm accomplish a task that it cannot effectively accomplish. There isn’t a single interpretation of the phrase.”

In the meantime, we can hope (and call) for greater transparency from clinical AI companies. When patient safety is at stake, we cannot just rely on their word.